Why Python Is Your Best Data Analysis and Management Companion

Enterprise data forms the foundation of business operations in companies across all industries. The drive to generate, gather, and process as much data as possible, in pursuit of even the slightest competitive edge, has given rise to the phenomenon of big data.

But big data can become a source of pain if it isn’t managed well and turned into an intelligible, actionable, and valuable asset.

This seemingly simple need made the data management market worth US$82.25 billion in 2021, with a compound annual growth rate (CAGR) of around 14% expected through 2030. Data analytics makes up a large portion of this market.

It is by analyzing their enterprise data that companies can make sense of it. The process is complex, though, and requires experts working in at least one programming language.

Python has become a favored choice here, with data analysts constantly finding new and innovative ways to use it for data analysis.

Python’s simplicity, coupled with its vast community of enthusiasts, has been the driving force behind this pairing of the language with data analysis.

Read on to learn how Python is used for data analysis, why it deserves its place there, and how it helps with data cleansing as well.

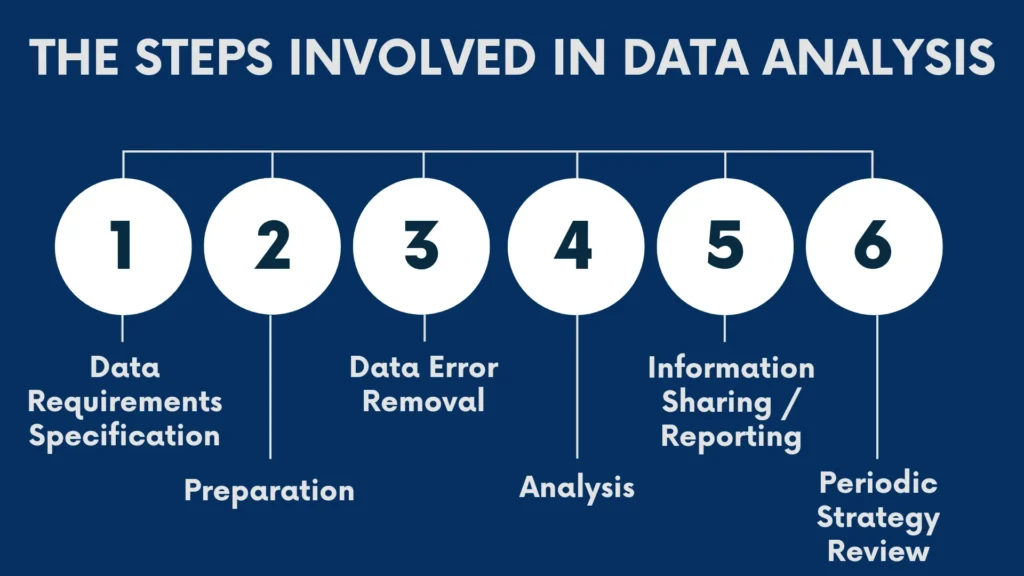

The Steps Involved in Data Analysis

A data analyst can work in almost any field, since data is ubiquitous across sectors. They can employ a host of methods to arrive at the intended solution, relying solely on their own judgment or combining their expertise with the client company’s standards.

While there is much room for creating customized analysis solutions, some common steps are followed everywhere.

1. Data Requirements Specification

Business problems come in many forms, and data analysts must be able to solve them through their work.

This requires the analyst to understand the root of the problem thoroughly, since the client will have invested considerable money and other resources in the analysis because of the complexity and scale involved.

If there is any ambiguity on the analyst’s part that goes unaddressed, the process won’t yield the desired results with high accuracy.

There should be clear communication between you and the analyst you’ve hired to ensure that no knowledge gaps about the project exist. You can confirm this by asking the analyst questions about the topics discussed.

The atmosphere should also let the analyst feel free to ask you about aspects of the project, and you should give them satisfactory answers. Ask whether they will use Python for data analysis, since it can deliver good results quickly.

Ideally, this querying session should occur before the start of the project, with no further need for discussions over topics already covered. However, you can expect issues to crop up during the process, which the analyst may bring your way.

Once this is done, you should have clear answers about what the problem is, what data the analysis requires, and which solutions the analyst can provide.
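One way to make the outcome of this session concrete is to record the agreed requirements in a form that can later be checked against the actual data. The sketch below is illustrative only: the `AnalysisRequirements` class, its fields, and the example business question are hypothetical, not a standard format.

```python
from dataclasses import dataclass, field


@dataclass
class AnalysisRequirements:
    """Hypothetical record of what was agreed before the project starts."""
    business_question: str
    required_columns: set[str]
    date_range: tuple[str, str]
    notes: list[str] = field(default_factory=list)

    def missing_columns(self, available_columns):
        """Return the required columns that a data set does not provide."""
        return self.required_columns - set(available_columns)


reqs = AnalysisRequirements(
    business_question="Which regions drove the drop in Q3 revenue?",
    required_columns={"order_id", "region", "order_date", "revenue"},
    date_range=("2023-07-01", "2023-09-30"),
)

# An export that lacks a column the analysis depends on.
print(sorted(reqs.missing_columns(["order_id", "region", "revenue"])))  # ['order_date']
```

A check like this surfaces a knowledge gap (here, a missing date column) before any analysis time is spent.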

2. Preparation

This is the stage where the data required for the analysis is obtained and stored after creating a viable and lucrative data management strategy.

It usually falls upon the analyst to collect the necessary data for solving the problem from multiple applicable sources. However, this is not a hard and fast rule, and you could hire a dedicated data management company too for the task.

Data can be broadly classified as internal or external. Internal data is generated by sources within the company, such as ERP software. External data is obtained from sources outside the company, such as the web.

The analyst or another expert may also use Python for data mining, as the language makes such operations quick and easy to carry out. This can give you the speed and cost advantage needed to gain a market edge.

The data can be stored in a variety of formats according to the specific needs of the project. Specific categories may be created, along with format standards that best suit the company’s needs.
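As a minimal sketch of this stage, assuming pandas is installed, the example below loads a hypothetical internal source (an ERP export in CSV form) and a hypothetical external source (JSON records, as a public API might return) and stores both in one SQLite database. The table names and data are invented for illustration; a real project would read from files or network endpoints instead of in-memory strings.

```python
import io
import sqlite3

import pandas as pd

# Hypothetical internal source: a CSV export from an ERP system.
erp_csv = io.StringIO(
    "order_id,customer,amount\n"
    "1001,Acme,250.0\n"
    "1002,Globex,120.5\n"
)
# Hypothetical external source: JSON records, e.g. from a public API.
external_json = io.StringIO(
    '[{"customer": "Acme", "industry": "Manufacturing"},'
    ' {"customer": "Globex", "industry": "Energy"}]'
)

orders = pd.read_csv(erp_csv)
industries = pd.read_json(external_json)

# Store both in one SQLite database so later steps read from a single place.
conn = sqlite3.connect(":memory:")
orders.to_sql("orders", conn, index=False)
industries.to_sql("customer_industry", conn, index=False)

print(pd.read_sql("SELECT COUNT(*) AS n FROM orders", conn))
```

SQLite is used here only because it ships with Python; the same `to_sql` call can target other databases through SQLAlchemy.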

The organization’s IT infrastructure needs are also assessed and addressed during this stage so that this aspect of the project causes no disruptions.

You should work with your analyst to determine these needs, especially if you’ve chosen to handle the work in-house. Opting for a third-party cloud service can serve you well here, thanks to the flexibility and cost-effectiveness it offers.

3. Data Error Removal

It is best to run the collected data through a series of functions that remove errors of all types, since some errors are inevitable. These functions include data standardization, data normalization, data deduplication, and data validation, among others.

Each addresses a specific type of data error, so it’s best to always apply the full set to your data. Your hired data analyst can perform these functions, or you can have an external data management services provider do it.

Settle who will do this when you hire your analyst. If it is the analyst, confirm whether they’ll use Python for data cleansing, as it can make the process more accurate and efficient.
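The four functions named above can be sketched with pandas, assuming it is installed. The data, the country mapping, and the "no negative revenue" rule are hypothetical. Note that "normalization" is interpreted here as min-max scaling of a numeric column; in database work the same term can instead mean restructuring tables to reduce redundancy.

```python
import pandas as pd

raw = pd.DataFrame({
    "customer": ["  Acme ", "acme", "Globex", "Initech"],
    "country": ["US", "usa", "DE", "US"],
    "revenue": [250.0, 250.0, 120.5, -40.0],
})

df = raw.copy()

# Standardization: consistent casing/whitespace and one code per country.
df["customer"] = df["customer"].str.strip().str.title()
df["country"] = df["country"].str.upper().replace({"USA": "US"})

# Deduplication: drop rows that are identical after standardization.
df = df.drop_duplicates()

# Validation: set aside rows that break a business rule (negative revenue).
invalid = df[df["revenue"] < 0]
df = df[df["revenue"] >= 0].copy()

# Normalization (min-max scaling): map revenue onto the 0-1 range.
rev = df["revenue"]
df["revenue_scaled"] = (rev - rev.min()) / (rev.max() - rev.min())

print(df)
print(invalid)
```

The order matters: standardizing first lets deduplication catch "  Acme " and "acme" as the same customer.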

Clean data makes it easier for an analyst to find trends and relationships in your enterprise data. Statistical analysis in Python is a good example.

Statistical measures, such as how far data points deviate from the mean, are only as accurate as the data values fed into them.
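To illustrate, the sketch below uses Python’s built-in `statistics` module to compare the standard deviation of a small hypothetical series before and after correcting a single data-entry error (1000 typed instead of 100).

```python
import statistics

# Hypothetical daily order counts; 1000 is a data-entry error for 100.
raw = [98, 102, 100, 97, 1000, 103]
cleaned = [98, 102, 100, 97, 100, 103]

print(statistics.stdev(raw))      # inflated by the single bad value
print(statistics.stdev(cleaned))  # reflects the real day-to-day variation
```

One wrong entry out of six is enough to make the spread look more than a hundred times larger than it really is.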

4. Analysis

This step is the heart of the operation. Here, the analyst works through the available enterprise data to find trends and patterns that aid understanding. Plenty of calculations are involved, and some data sets may be combined with others to form the right data set for the analysis.

The analyst can choose from several tools for the task, the most common being SQL and Excel spreadsheets.

These tools offer many excellent built-in functions for the process, such as pivot tables in Excel for summarizing data.

However, programming languages offer a more flexible way of obtaining analytical solutions due to their customizability.

The simplicity and broad capabilities of Python make it a go-to choice for this purpose. If the analyst knows how to use Python for advanced data analysis, they can quickly create and implement analysis algorithms without sacrificing accuracy.
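As a sketch of how the combining and summarizing described above look in Python, assuming pandas is installed, the example merges two hypothetical data sets on a shared key and then builds the equivalent of an Excel pivot table with `pivot_table`.

```python
import pandas as pd

sales = pd.DataFrame({
    "store_id": [1, 1, 2, 2, 3],
    "month": ["Jan", "Feb", "Jan", "Feb", "Jan"],
    "revenue": [100, 120, 80, 90, 150],
})
stores = pd.DataFrame({
    "store_id": [1, 2, 3],
    "region": ["North", "South", "North"],
})

# Combine the two data sets on their shared key.
combined = sales.merge(stores, on="store_id", how="left")

# Excel-style pivot table: total revenue per region and month.
pivot = combined.pivot_table(
    index="region", columns="month", values="revenue", aggfunc="sum", fill_value=0
)
print(pivot)
```

Unlike a spreadsheet pivot, this is a script, so the same steps can be rerun unchanged when next month’s data arrives.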

5. Information Sharing / Reporting

Report generation and distribution are as important a part of the data analysis process as the analysis itself. Without them, you won’t know the results of the project or how to make the business decisions that will benefit your company.

Data analysts share the insights from their work primarily through visualizations, such as charts and graphs, because these simplify complex numbers and add context that stakeholders might otherwise miss.

The beauty of Python is that it is just as useful for visualization as it is for analysis. Its packages make it easy to create elaborate, polished visualizations.

This is one more reason to use Python for data analysis. With just a few commands, you can produce a clear representation of the insights in the analyzed data, with real-time animation, too, if necessary.
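A minimal sketch of such a chart, assuming Matplotlib is installed: it draws a bar chart of hypothetical regional revenue and saves it as an image file that can go into a report. The non-interactive `Agg` backend is selected so the script also runs on servers without a display.

```python
import matplotlib

matplotlib.use("Agg")  # render to a file; no display needed
import matplotlib.pyplot as plt

regions = ["North", "South", "East", "West"]
revenue = [250, 170, 210, 190]  # hypothetical figures

fig, ax = plt.subplots(figsize=(6, 4))
ax.bar(regions, revenue, color="steelblue")
ax.set_title("Revenue by region")
ax.set_xlabel("Region")
ax.set_ylabel("Revenue (thousand US$)")
fig.tight_layout()
fig.savefig("revenue_by_region.png", dpi=150)
```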

6. Periodic Strategy Review

Every data analysis strategy has a shelf life, after which it yields diminishing returns on the effort put into it. This is because market dynamics and customer tastes shift, and those shifts are reflected in the data.

Sticking with a fixed strategy for analyzing this data will lead to incorrect interpretations. This is why you need to review your data analysis strategy periodically and adapt it to your market’s changing needs.
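A periodic review can be partly automated. The sketch below is one simple, hypothetical approach: the `has_drifted` helper and its 20% threshold are illustrative choices, not an established method. It flags when the latest value of a metric moves far from its historical baseline, which may signal that the strategy needs revisiting.

```python
from statistics import mean


def has_drifted(values, baseline_periods, threshold=0.2):
    """Hypothetical helper: True if the most recent value differs from the
    mean of the first `baseline_periods` values by more than `threshold`,
    expressed as a fraction of that baseline mean."""
    baseline = mean(values[:baseline_periods])
    latest = values[-1]
    return abs(latest - baseline) / baseline > threshold


# Hypothetical monthly average order values (US$).
monthly_aov = [50, 52, 49, 51, 65]
print(has_drifted(monthly_aov, baseline_periods=4))  # True: 65 is ~29% above 50.5
```

A flag like this does not say *why* the data changed; it only tells the team when a closer look is due.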

This also includes changes to the data preparation process. You need to account not only for the quality of your data but also for its quantity, since you are likely to add more as you scale.

This could mean retraining your in-house team of data analysts if you’re not outsourcing the task. You must then ensure they learn the most efficient methods and tools for the purpose, especially how to use Python for data analysis in line with the new requirements.

Why Python Is Well-suited for Data Analysis

To understand Python’s role in data analysis, we need to look at its origins and the features that make it suitable for the task. Python is a multi-paradigm programming language with strong support for object-oriented programming (OOP).

Object-oriented languages are so called because they let you create “objects,” whose behaviors and attributes are defined and controlled by the code. Python was first released in 1991 but has gained most of its popularity in the last decade.

It is a high-level language, meaning it abstracts away machine-level details such as memory management. Its standard implementation, CPython, compiles source code to bytecode that an interpreter then executes, rather than producing machine code ahead of time. High-level languages are very helpful for users, as they make commands easy to write while covering a wide range of scenarios.

Object orientation suits data operations like analysis and cleaning, because data and the operations on it can be bundled together and controlled precisely. Access to attributes that hold sensitive data can also be discouraged, by convention with a leading underscore or by exposing only read-only properties, although Python does not enforce strict privacy the way some languages do.
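The sketch below shows what an object looks like in practice: a hypothetical `CustomerRecord` class bundles customer attributes with methods that operate on them, and exposes the email address only in masked form through a read-only property. The class and its fields are invented for illustration. The leading underscore on `_email` is a convention that tells other developers not to touch it directly; Python will still allow access if someone insists.

```python
class CustomerRecord:
    """Bundles customer data (attributes) with operations on it (methods)."""

    def __init__(self, name, email, purchases):
        self.name = name
        self._email = email  # leading underscore: "internal" by convention only
        self.purchases = list(purchases)

    @property
    def masked_email(self):
        """Read-only view that hides most of the sensitive attribute."""
        user, _, domain = self._email.partition("@")
        return f"{user[0]}***@{domain}"

    def total_spent(self):
        return sum(self.purchases)


record = CustomerRecord("Dana", "dana@example.com", [20.0, 35.5])
print(record.total_spent())  # 55.5
print(record.masked_email)   # d***@example.com
```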

Below are more reasons that make Python a go-to choice for data analysis:

1. It’s Easy to Use and Learn

Python’s syntax is concise and readable, and the interpreter handles much of the low-level work, such as memory management, so code often reads almost like ordinary sentences.

This is beneficial on two levels. First, it makes the language easy to learn. Anyone with a basic understanding of programming can pick it up quickly and become fairly proficient within a short time.

Second, it makes the language easy to use, since complex operations can be expressed in a few short lines. So even when the application is demanding, as data analysis is, Python can make the entire process less cumbersome and more efficient.

The ease-of-learning factor also enables corporate trainers to quickly upgrade employee skill levels. This is essential if you want your in-house data analysis team to be up to the task of handling new projects that can come your way at a moment’s notice.

2. It Is Open Source

Python is released under an OSI-approved open-source license, which makes it free to use, even for commercial purposes. This open-source nature is a major reason for its popularity, as more people can adopt it without worrying about licensing fees or litigation.

You can adapt the language to better suit your analysis project’s needs, and the permissive license also lets you redistribute it, provided you follow the license terms.

3. There Is a Large, Global Support System

Python’s popularity has led many developers and enthusiasts to use it for various applications worldwide. They continuously interact and communicate through various means like online forums and dedicated social media groups.

This means you don’t have to worry about getting stuck on some part of your data analysis project since there is a high likelihood that someone has worked on that particular problem and posted a Python-based solution in one of the communication channels.

If there isn’t one, you’re not out of luck either. Python enthusiasts are often happy to offer custom solutions to your particular problems in forums or elsewhere.

Your analysts can simply post the issue online and are likely to get an answer in some form. Because the community spans the globe, someone is usually awake to respond, so answers tend to arrive quickly and often come from experienced practitioners.

These exchanges can even help your team learn to use Python for data analysis more effectively.

4. It Has a Wide Range of Libraries

Programming languages win or lose on the range of library functions they offer and how easy those functions are to use. Python has your back here, with an extensive standard library and a vast ecosystem of third-party packages.

Better still, there are libraries dedicated specifically to data analysis and data cleaning. These come from the core Python developers and from the vast worldwide community of Python developers.

The language’s open-source nature helps here as well, since most of these libraries are free to use and modify to suit your needs. The community also constantly develops and updates them, adding new features for greater convenience.
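Even before any third-party package is installed, Python’s standard library covers basic analysis. This sketch reads hypothetical order data with the built-in `csv` module and summarizes it with the `statistics` module; in practice the data would come from a file opened with `open()` rather than an in-memory string.

```python
import csv
import io
import statistics

# Hypothetical CSV data; a real script would use open("orders.csv").
data = io.StringIO(
    "order_id,amount\n1,12.5\n2,40.0\n3,22.0\n4,18.5\n5,95.0\n6,30.0\n"
)
amounts = [float(row["amount"]) for row in csv.DictReader(data)]

print(statistics.mean(amounts))
print(statistics.median(amounts))          # 26.0
print(statistics.quantiles(amounts, n=4))  # quartile cut points
```

Packages such as Pandas build on this foundation with far richer tools, but nothing beyond a Python installation is needed for a quick summary like this one.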

5. Easy Scaling

Scaling is as much a bottleneck for companies as it is a requirement for growth. Using Python for data analysis is one way to ease this bottleneck.

The language is scalable and flexible enough to be used for multiple projects across a company’s operations. Its large set of libraries lets you reuse existing code rather than write everything from scratch, so your codebase can grow without adding excessive delays and expenses.
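One concrete scaling technique, sketched here assuming pandas is installed, is processing a file in chunks so that data too large for memory can still be aggregated. The in-memory CSV stands in for a large file, and the 10,000-row chunk size is an arbitrary illustrative choice.

```python
import io

import pandas as pd

# Stand-in for a file too large to load at once (hypothetical data, 100,000 rows).
big_csv = io.StringIO("region,revenue\n" + "North,10\nSouth,20\n" * 50_000)

totals = pd.Series(dtype="float64")
# Read 10,000 rows at a time and accumulate per-region totals.
for chunk in pd.read_csv(big_csv, chunksize=10_000):
    totals = totals.add(chunk.groupby("region")["revenue"].sum(), fill_value=0)

print(totals)
```

Only one chunk is in memory at a time, so the same code handles a much larger file by simply pointing `read_csv` at it.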

This can ease the pressure on your ROI targets, since scaling brings returns for comparatively little investment. It also lets you pursue your scaling plans with more confidence: the risks are lower and better understood, and solutions to likely problems are often readily available.

Some Cons of Using Python for Data Analysis

As great as Python is for data analysis and other data management functions, it has its share of shortcomings. You should be aware of them, since they can affect the results you get from using Python for data analysis.

1. It’s a General Purpose Language

Python is designed as a jack-of-all-trades language that anyone can use for almost any task. While this makes it helpful for data analysis and data management at large, it cannot handle every associated task out of the box.

Your analysis team or those in your outsourcing agency will have to modify it to better suit your specific project requirements.

For example, they will have to either create or import libraries that best apply to your needs, and tasks like these take time and other resources.

The time and resources needed depend on the scale and requirements of the project, the experience of the team members, and how quickly you can spare those resources.

A shortfall in any of these could take the analysis and other associated functions longer than expected to complete.

2. Expertise Can Be Expensive

Since data analysts can choose from plenty of other tools for their work, though arguably none as versatile as Python, those who specialize in Python form a niche. Hiring one with the experience and skill level your in-house team needs can turn out to be expensive.

Outsourcing helps greatly here, as economies of scale and currency exchange rates bring down the cost per person. And while the software tools may be inexpensive thanks to their open-source nature, the hardware needed to run complex analyses can strain your budget if you go in-house.

3. Competition Is on the Horizon

The rise of big data led to Python being widely adopted for data analysis and management. However, other languages and frameworks have also become popular for this purpose, with all the bells and whistles you could need.

Scala is one such example; it and Spark, a framework written in Scala, are often used for processing large data volumes. Scala can be easily embedded in enterprise programs and runs on the popular Java Virtual Machine (JVM).

Because Scala is compiled to JVM bytecode, it generally runs loop-heavy code faster than standard Python, making it a viable alternative to Python for some data analysis workloads.

How Python Is Used for Data Analysis and Data Cleansing

1. Data Analysis

Python finds use in data analysis in the following ways.

#. Data Mining

An engineer can use libraries like Scrapy and BeautifulSoup to mine data from websites. Scrapy is a framework for building crawlers that extract structured data from websites, and it can also work with APIs. BeautifulSoup parses HTML and XML, which helps when data is hard to retrieve through an API; it lets you scrape data and arrange it in the preferred format.
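A minimal BeautifulSoup sketch, assuming the `beautifulsoup4` package is installed. To keep it self-contained, it parses a hypothetical HTML snippet instead of downloading a page; fetching a live page (for example with the `requests` library) is left out.

```python
from bs4 import BeautifulSoup

# Static HTML standing in for a downloaded page (hypothetical content).
html = """
<table id="prices">
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Widget</td><td>4.99</td></tr>
  <tr><td>Gadget</td><td>12.50</td></tr>
</table>
"""

soup = BeautifulSoup(html, "html.parser")
rows = []
for tr in soup.find("table", id="prices").find_all("tr")[1:]:  # skip header row
    name, price = (td.get_text(strip=True) for td in tr.find_all("td"))
    rows.append({"product": name, "price": float(price)})

print(rows)
```

The resulting list of dictionaries can be passed straight to `pandas.DataFrame` for the processing steps described next.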

#. Data Modeling and Processing

Python has the NumPy and Pandas libraries for data processing and modeling. NumPy provides efficient arrays for large data sets and makes mathematical operations on them easier through vectorization, which applies an operation to a whole array at once.
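The sketch below, assuming NumPy is installed, contrasts a vectorized NumPy expression with an equivalent pure-Python loop on hypothetical prices and quantities. Vectorized operations run in compiled code, which is also the usual way to offset the slow Python loops mentioned among the cons above.

```python
import numpy as np

prices = np.array([4.99, 12.50, 7.25, 3.10])
quantities = np.array([10, 3, 8, 20])

# Vectorized: one expression operates on whole arrays, no Python loop.
line_totals = prices * quantities
grand_total = line_totals.sum()

# Equivalent pure-Python loop, shown for comparison.
loop_total = sum(p * q for p, q in zip(prices.tolist(), quantities.tolist()))

print(line_totals)
print(grand_total, loop_total)
```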

Pandas loads data into a DataFrame, a tabular structure in which you can add or delete columns, insert new data, and perform numerous other operations.
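A short sketch of those DataFrame operations, assuming pandas is installed and using hypothetical product data: adding a derived column, deleting a column, and filtering rows.

```python
import pandas as pd

df = pd.DataFrame({
    "product": ["Widget", "Gadget", "Gizmo"],
    "price": [4.99, 12.50, 7.25],
    "quantity": [10, 3, 8],
    "internal_code": ["A1", "B2", "C3"],
})

df["line_total"] = df["price"] * df["quantity"]  # add a derived column
df = df.drop(columns=["internal_code"])          # delete a column
big_orders = df[df["line_total"] > 40]           # keep rows above a threshold

print(big_orders)
```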

#. Data Visualization

Python offers excellent data visualization through the Matplotlib and Seaborn libraries. They can be used to create a variety of graphs, pie charts, histograms, heatmaps, and other visualizations that are easy to understand and pleasing to look at.
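As one example, assuming Seaborn, Matplotlib, and pandas are installed, this sketch draws a heatmap of the correlations between a few hypothetical weekly metrics and saves it to a file.

```python
import matplotlib

matplotlib.use("Agg")  # render to a file; no display needed
import matplotlib.pyplot as plt
import pandas as pd
import seaborn as sns

# Hypothetical weekly metrics.
df = pd.DataFrame({
    "ad_spend": [10, 12, 9, 15, 14, 11],
    "visits": [200, 230, 190, 280, 270, 215],
    "orders": [20, 24, 18, 30, 27, 22],
})

fig, ax = plt.subplots(figsize=(5, 4))
# Annotated heatmap of pairwise correlations, on a fixed -1 to 1 color scale.
sns.heatmap(df.corr(), annot=True, fmt=".2f", cmap="coolwarm", vmin=-1, vmax=1, ax=ax)
ax.set_title("Correlation between weekly metrics")
fig.tight_layout()
fig.savefig("correlation_heatmap.png", dpi=150)
```

Fixing `vmin` and `vmax` keeps colors comparable across reports, since the same shade always means the same correlation.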

2. Data Cleansing

Python is also well suited to data cleansing, since libraries such as Pandas and NumPy provide ready-made functions for finding and fixing errors in a data set.
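As a sketch of typical cleansing steps, assuming pandas is installed, the example below converts malformed dates and amounts into proper types (turning unparseable entries into missing values instead of failing), fills a missing category, and drops rows that still lack required values. The data and the "Unknown" placeholder are hypothetical.

```python
import pandas as pd

raw = pd.DataFrame({
    "order_date": ["2023-01-05", "2023-01-06", "not recorded", "2023-01-08"],
    "amount": ["120.50", "n/a", "89.99", "45"],
    "region": ["North", None, "South", "North"],
})

df = raw.copy()
# Invalid entries become NaT/NaN instead of raising errors.
df["order_date"] = pd.to_datetime(df["order_date"], format="%Y-%m-%d", errors="coerce")
df["amount"] = pd.to_numeric(df["amount"], errors="coerce")
# Fill a missing category with an explicit placeholder.
df["region"] = df["region"].fillna("Unknown")
# Drop rows still missing values the analysis needs.
df = df.dropna(subset=["order_date", "amount"])

print(df)
```

Whether to drop or fill a missing value is a business decision; the code only makes that decision explicit and repeatable.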

Conclusion

Data is the gift that allows organizations to maintain a competitive edge and make their operations more efficient. However, this is possible only if you keep that data in the best shape possible and work on it via the best data analysis methods.

This is where the answer to why you should use Python for data analysis becomes clear: you get multiple benefits, such as cost-competitiveness, accurate results, and clear visual representations of those results, along with easy handling of associated functions like data cleansing.

It can give your company the boost in data use it needs to meet its customer commitments and business objectives.

Author Bio

Jessica is a Content Strategist who has been with Data-Entry-India.com, a globally renowned data entry and management company, for over five years.

She spends most of her time reading and writing about transformative data solutions, helping businesses to tap into their data assets and make the most out of them.

So far, she has written over 2000 articles on various data functions, including data entry, data processing, data management, data hygiene, and other related topics.

Besides this, she also writes about eCommerce data solutions, helping businesses uncover rich insights and stay afloat amidst the transforming market landscapes.